A Breakthrough Against Deepfakes

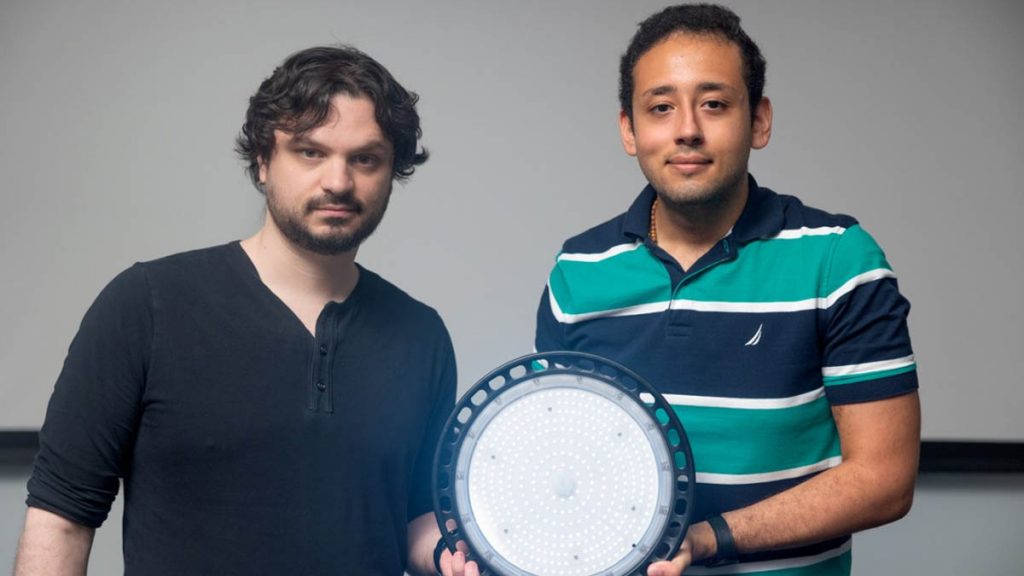

Researchers at Cornell University have introduced a method for fighting deepfakes called noise-coded lighting. Instead of adding a digital watermark to a video file after it is recorded, the technique embeds a coded signal in the light sources that illuminate a scene, so any camera filming that scene records the watermark along with the footage. This makes it much harder for manipulated video to pass as authentic.

How Noise-Coded Lighting Works

The method embeds hidden information in subtle, noise-like fluctuations in a light's brightness, too small for people to notice. The pattern is not actually random: it follows a secret code. Because the camera records how the scene responds to this coded flicker, the footage can later be decoded into a low-fidelity, time-stamped version of the original video, which the researchers call a code video. If a video is tampered with, whether through deepfake manipulation, editing, or speed changes, the altered sections no longer agree with the code video, exposing the fake.
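The encode-and-decode idea can be illustrated with a toy spread-spectrum model. This is a minimal sketch under simplifying assumptions, not the Cornell team's implementation: a secret seed (standing in for the code) generates a pseudo-random sequence of +1/-1 values, the light's brightness wobbles by an assumed 2% around its base level, each pixel's recorded brightness is its reflectance times the light plus sensor noise, and correlating a pixel's recorded samples with the code recovers how strongly that pixel responds to the coded light. Across pixels, those values form a faint image of the scene, loosely analogous to one frame of a code video. The function names `make_code`, `render_pixel`, and `decode_pixel` and all parameter values are hypothetical.

```python
import random

def make_code(seed: int, n: int) -> list[float]:
    """Hypothetical secret code: a pseudo-random +1/-1 sequence derived from a seed."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]

def render_pixel(reflectance: float, code: list[float], base: float = 1.0,
                 amp: float = 0.02, noise: float = 0.01, seed: int = 0) -> list[float]:
    """Simulate one pixel over time: reflectance x coded light + Gaussian sensor noise."""
    rng = random.Random(seed)
    return [reflectance * (base + amp * c) + rng.gauss(0.0, noise) for c in code]

def decode_pixel(samples: list[float], code: list[float]) -> float:
    """Estimate the pixel's response to the coded light by correlating with the code."""
    mean = sum(samples) / len(samples)
    num = sum((s - mean) * c for s, c in zip(samples, code))
    den = sum(c * c for c in code)
    return num / den  # approximately reflectance * amp

# A three-pixel "scene"; dividing by the assumed amplitude recovers its reflectances.
AMP = 0.02
code = make_code(seed=1234, n=4000)
scene = [0.2, 0.5, 0.9]
code_image = [decode_pixel(render_pixel(r, code, amp=AMP, seed=i), code) / AMP
              for i, r in enumerate(scene)]
print([round(v, 2) for v in code_image])
```

In a single frame the flicker is about the same size as the sensor noise; the scene only emerges after thousands of frames are correlated against the right code.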

Software and Hardware Flexibility

The technique is also practical to deploy. Light from a computer screen can be coded in software, while ordinary lamps can be fitted with a small chip. Without the secret code, the embedded signal is indistinguishable from background noise. This information asymmetry means that whoever holds the code can verify a recording, while deepfake creators lack the hidden data they would need to produce manipulations that decode as authentic.
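The asymmetry can be sketched in a simple toy model (an illustration under assumed parameters, not the published method). Here the coded flicker is deliberately smaller than the per-frame sensor noise, so no single frame reveals it. Correlating the recording with the correct code recovers a clear response, while correlating with a code generated from a wrong seed, which is all an attacker without the key can do, returns a value near zero. The names `pm1_code` and `coded_response`, the seeds, and the noise levels are hypothetical.

```python
import random

def pm1_code(seed: int, n: int) -> list[float]:
    """Hypothetical +1/-1 code sequence generated from a secret seed."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]

def coded_response(samples: list[float], code: list[float]) -> float:
    """Correlate mean-removed samples with a candidate code (near 0 if the code is wrong)."""
    mean = sum(samples) / len(samples)
    return sum((s - mean) * c for s, c in zip(samples, code)) / len(code)

N = 4000
REFLECTANCE, AMP, NOISE = 0.8, 0.01, 0.02  # flicker (0.8 * 0.01 = 0.008) is below the noise (0.02)
secret = pm1_code(seed=2025, n=N)
rng = random.Random(7)
samples = [REFLECTANCE * (1.0 + AMP * c) + rng.gauss(0.0, NOISE) for c in secret]

right = coded_response(samples, secret)                   # holder of the key
wrong = coded_response(samples, pm1_code(seed=99, n=N))   # attacker guessing a key
print(f"right key: {right:.4f}   wrong key: {wrong:.4f}")
```

With the right key the result sits close to the expected 0.008; with a wrong key it is indistinguishable from noise.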

Rigorous Testing in Real Conditions

The researchers tested the method in a range of conditions, including indoor and outdoor scenes, varying light levels, different levels of video compression, and camera motion. According to the team, the coded signal held up across these conditions and revealed manipulations that were invisible to the human eye. Even a counterfeiter who learned how to decode the signal would face a much harder task: when several coded lights illuminate a scene, they would have to fake a separate code video for each light, and all of those code videos would have to agree with one another.
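How a tampered segment stands out can be sketched in a toy model by checking the code over short windows of frames. In this illustration (hypothetical names, parameters, and threshold; not the researchers' algorithm), frames 1000 to 1499 of a recording are replaced with content rendered without the coded light, as a pasted-in deepfake might be. Windows of genuine footage still correlate strongly with the code, while the replaced windows do not, so they are flagged.

```python
import random

def code_seq(seed: int, n: int) -> list[float]:
    """Hypothetical +1/-1 code from a secret seed."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]

def window_strength(samples: list[float], code: list[float]) -> float:
    """Correlation of mean-removed brightness with the code over one window."""
    mean = sum(samples) / len(samples)
    return sum((s - mean) * c for s, c in zip(samples, code)) / len(code)

def flag_tampered(frames: list[float], code: list[float],
                  window: int = 250, threshold: float = 0.006) -> list[int]:
    """Return the start index of every window whose coded response is too weak.

    The default threshold is half the expected response (0.6 * 0.02 = 0.012)
    of the genuine footage simulated below.
    """
    flagged = []
    for start in range(0, len(frames) - window + 1, window):
        seg = slice(start, start + window)
        if window_strength(frames[seg], code[seg]) < threshold:
            flagged.append(start)
    return flagged

N, AMP, NOISE = 3000, 0.02, 0.01
code = code_seq(seed=42, n=N)
rng = random.Random(1)
# Genuine recording: one pixel of reflectance 0.6 under the coded light.
frames = [0.6 * (1.0 + AMP * c) + rng.gauss(0.0, NOISE) for c in code]
# Tampering: frames 1000-1499 replaced by content rendered without the code.
for t in range(1000, 1500):
    frames[t] = 0.7 + rng.gauss(0.0, NOISE)

print(flag_tampered(frames, code))
```

Dropping or duplicating frames, as in a speed change, would similarly push later frames out of step with the code and weaken the correlation from that point on.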

Applications Beyond Deepfake Detection

Beyond spotting manipulated videos, the technology could help protect sensitive recordings such as surveillance footage, government recordings, and video containing personal information. By building invisible, light-based authentication into the recording environment itself, organizations could reduce the risk of identity theft, misinformation campaigns, and unauthorized tampering.

Conclusion

As deepfakes grow more sophisticated, techniques like noise-coded lighting may become important tools for digital security. Because the markers are embedded in a scene's lighting at the moment of recording, Cornell's approach could change how video authenticity is verified in the era of artificial intelligence and make digital deception considerably harder.