In a world where artificial intelligence (AI) continues to reshape industries and daily life, the question of trust and safety has never been more urgent. To address this, Korean researchers are taking a bold step forward—leading the development of two major international standards designed to ensure AI systems are both safe and reliable. The Electronics and Telecommunications Research Institute (ETRI) has submitted two groundbreaking proposals to the International Organization for Standardization (ISO/IEC): AI Red Team Testing and Trustworthiness Fact Labels (TFLs). Together, they aim to create a unified global framework for evaluating AI risk, transparency, and accountability.

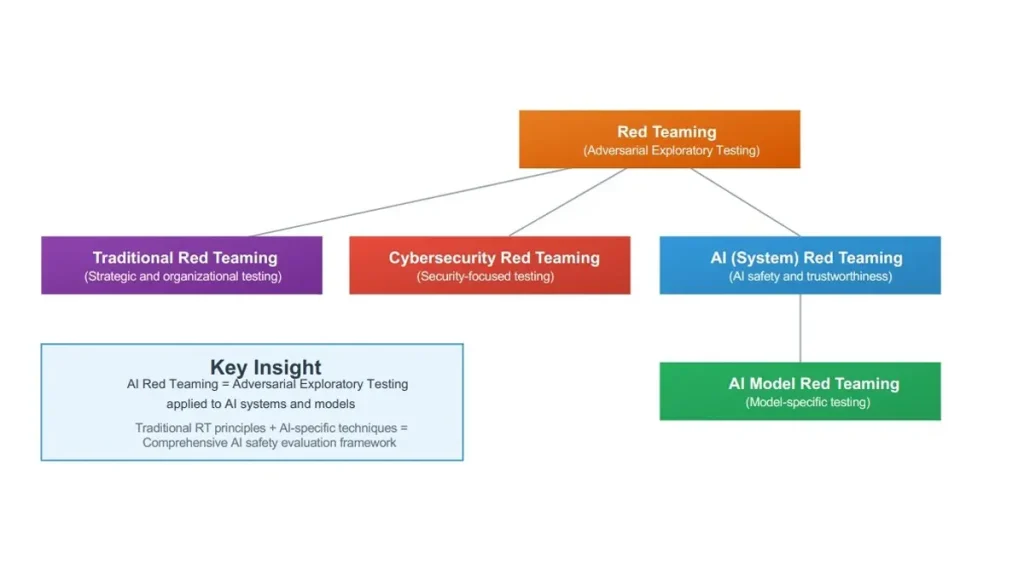

The AI Red Team Testing standard introduces a proactive approach to identifying vulnerabilities in AI systems before they can be exploited. This process mirrors cybersecurity “red teaming,” where experts simulate attacks to expose weaknesses. In AI, it means stress-testing algorithms to reveal biases, misinformation risks, and potential misuse. For example, researchers can simulate real-world scenarios to see how generative AI models respond to adversarial prompts or attempts to bypass ethical safeguards. ETRI is currently leading this work as the editor of ISO/IEC 42119-7, which will establish international test procedures applicable across sectors—from healthcare and finance to national defense.

ETRI’s leadership extends beyond the lab. In partnership with Korea’s Ministry of Food and Drug Safety, the institute hosted Asia’s first-ever AI Red Team Challenge and Technology Workshop for advanced medical devices in Seoul. The event brought together medical professionals, data scientists, and cybersecurity experts to test AI medical products for bias and reliability. Additionally, ETRI is collaborating with Seoul Asan Medical Center to develop a medical-specific red team evaluation methodology and test framework for digital medical devices powered by AI. These efforts are reinforced by partnerships with major Korean tech players like NAVER, KT, LG AI Research, STA, Upstage, and SelectStar, forming a coalition to drive AI safety and global standardization.

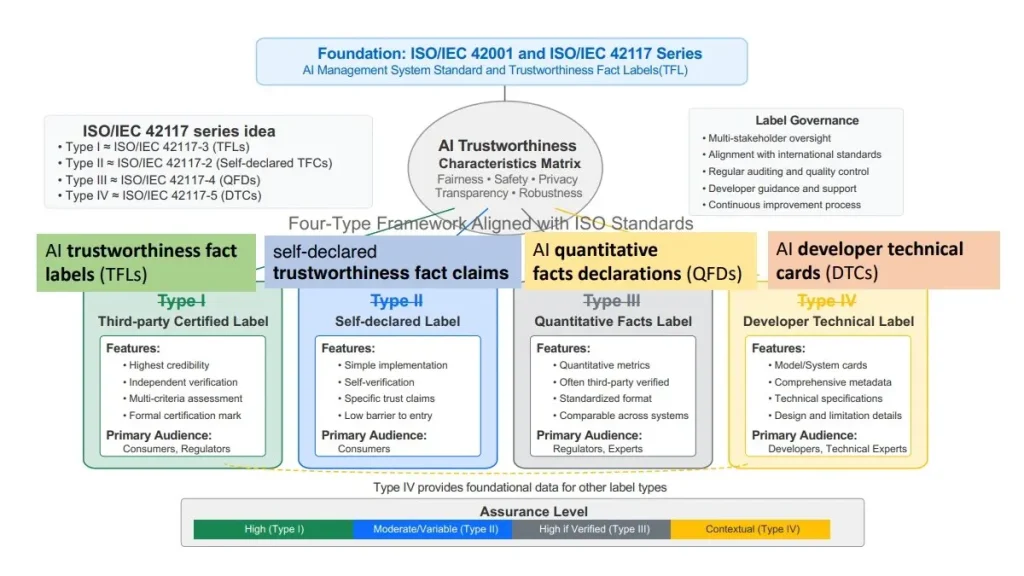

The second initiative, Trustworthiness Fact Labels (TFLs), is designed to give users transparent information about an AI system’s reliability—similar to nutrition labels on food packaging. These labels visually display metrics such as accuracy, bias detection, data integrity, and carbon footprint. ETRI is spearheading development of the ISO/IEC 42117 standard for TFLs, allowing AI developers or certified third parties to verify and publish the trust levels of AI applications. This innovation not only promotes accountability but also empowers consumers to make informed decisions about the AI tools they use daily.

In the broader global context, these standards align with Korea’s Sovereign AI strategy and the AI G3 Leapfrog Initiative, which aim to position the country as a leader in both AI development and ethical governance. Just as the U.S. National Institute of Standards and Technology (NIST) advances America’s AI safety framework, ETRI seeks to establish Korea as a central player in shaping international AI norms. The institute’s ongoing work with the AI Safety Research Institute underscores its commitment to integrating safety, transparency, and sustainability into the very foundation of AI systems.

According to Kim Wook, Program Manager at the Institute of Information & Communications Technology Planning & Evaluation (IITP), these efforts represent a critical milestone: “Providing AI safety and trustworthiness will make it easier for everyone to use AI responsibly, and leading international standards is a turning point toward becoming a country that defines AI norms.” Similarly, Lee Seung Yun, Assistant Vice President at ETRI, emphasized that “AI red team testing and trustworthiness labels are becoming key components of global AI regulations. These standards will serve as universal criteria for evaluating AI safety and reliability worldwide.”

In conclusion, Korea’s leadership in establishing AI safety and trustworthiness standards marks a pivotal shift from being a fast follower to a global first mover in responsible AI innovation. Through initiatives like AI Red Team Testing and Trustworthiness Fact Labels, ETRI is helping set the ethical and technical foundation for a safer, more transparent AI-driven future.